Part one: Introduction and logic

In our enlightened society, we tend to look down on those who blindly follow religious doctrines. We accuse them of being ignorant and close-minded, not questioning unreasonable claims by their authorities, and when challenged, resorting to rhetoric or aggression rather than logic to defend their views.

Perhaps

phrased a bit extremely, this is the essence of the attitudes of most ‘civilised’

societies that are based on scientific knowledge. Science encourages critical

thinking about the world, and gains knowledge by performing experiments that

help us figure out how the world works. Religion, they say, only makes up facts

that happened in the past to explain things in our everyday lives. That is more

or less the way they tend to think.

But… are those who follow science really that different? Take a moment to think about the last time you actually thought critically about what you read in a science magazine.

It does not take much intellectual effort to question a fact or opinion: ‘I think this is wrong, because of A’, where A is a fact you have learnt that contradicts the claim. This is not what I would call critical thinking, something our modern society values highly, yet misuses so often that it is almost frightening.

Critical thinking is more about questioning the essence or nature of a statement or piece of knowledge. Thinking critically is not to question a claim with another claim that contradicts it and say that one of them is wrong – that is just pointless argument. When thinking critically, you challenge the claim per se (Latin for ‘in itself’) by assessing its fundamental reasoning. An example of critical thinking is detecting fallacies – specific cases of flawed logical reasoning – such as circular reasoning and false dilemma, both of which are surprisingly common in science. (Fallacies will be discussed in more detail later.)

Having this

in mind, ask yourself again: when did you last think critically about a scientific

statement?

Would you

then agree with me that, in general, we only rarely – if ever – do this?

Hopefully, this should shock you, not only because you have realised that we

then are not much different from the blindly religious people we denounce as ‘ignorant’

or even ‘brainwashed’, but also since you understand and appreciate the

importance of critical thinking and realise what we are lacking.

If we want

to justify our trust in science, we need to be able to convince ourselves (and

eventually others) of why scientific knowledge is ‘better’ than, say, religious

beliefs. To do that, we need to look at the essence and nature of science, and

compare it with the essence and nature of other ways of obtaining knowledge,

e.g. religion.

This is not an easy task, and it should not surprise you that so few are able to go through with it, since not many are familiar with how scientists (ideally) reason. We all know that they perform experiments and observe results. Some of us are further aware that scientists interpret the results of the experiments within a theoretical framework, use it to generalise about similar situations, and so add to our collective knowledge. Moreover, some know of the importance of replicability (that the experiments should be repeatable), and probably fewer have heard about falsificationism (that ideas are confirmed not by trying to support them, but by trying to destroy them and not succeeding).

But how does

all of this really help us understand the world? Why do we need to perform

experiments? Why do we want to generalise? And why should the experiments be

replicable? Why does failing to disprove something make it more reliable than

if you can show that it is true? How do all these things interrelate and

connect to form a comprehensive whole? In short, what are the theoretical

reasons for why science is a good method for making sense of the physical

world?

That is what

this post series will be all about: assessing the scientific method, by

bringing to light how it works, and thinking a lot about what makes it good and

what problems it faces. In other words, we will critically think about the

strengths and weaknesses of the scientific method.

Note that I do not intend to criticise science, but to make us think critically about it, that is, to evaluate it. There is a difference in purpose here, and it is really important that you do not misunderstand it. I am not going to say that science is wrong, only that it is not as right as many people think it is. Indeed, it may very well seem as if I am pointing more toward its flaws and limitations, but that is mostly because I believe that is the part the general public is less familiar with. I will of course also emphasise the really good aspects of science.

Honestly,

considering all that science has achieved, most indisputably shown in its

practical applications, it is clear that blindly arguing that science is all

bad and wrong is just silly. All I wish to achieve is to encourage you to think

for yourself about the way you see science, and whether that view is properly

justified, or whether you might need to think again.

In order to do this, we first need a solid introduction to the essentials of reasoning and logic, and a detailed walk-through of the scientific method. This will include a whole bunch of new words and definitions that you are probably unfamiliar with, but please bear in mind that understanding these fundamental concepts is key to understanding the rest, so I strongly urge you not to skip this part. In coming posts, I will go through each main step in the chain of idealised scientific reasoning, analysing them in detail in the light of what we will have learned earlier. I will also address other central concepts of science, such as models, operationalisation, paradigms, the ‘data first vs. theory first’ discussion, and also the role of mathematics.

Logic – the structure of an argument

Perhaps contrary to what most people think, logic is actually all about the structure of arguments, and the purpose of this structure is to preserve truth when reasoning beyond what is already known. In logic, we want to be able to build on what we know in such a way that we can be certain that what comes out of it is as true as the things we base it on. I will soon show you why, but first you might wonder why logic would be useful.

What I said

above is basically that ‘logical’ is not the same as ‘true’. ‘Logical’ means

that it follows a format in which the conclusion (the output of a chain of

reasoning) is as true as the arguments you base it on (the input). If you start

with true statements and reason logically, you will end up with true

statements. If you start with some true and some false statements, logical

reasoning will not result in statements you can rely on to be true.

Why, then,

should we bother about logic? It does not seem that helpful if we cannot get

anything out of it that is truer than what we already have. One could thus see

logic as redundant, since it cannot take us any closer to the truth, since it

does not ‘improve’ truths.

I would

agree that this is a limitation in some cases, but it all depends on how you use logic. What we ideally want

to do with logical (and scientific) reasoning is to extrapolate knowledge. To extrapolate is basically to take something you know or expect to be true in one

case and apply the same knowledge to a related but different case, so that you

can learn more about that second case without needing to observe it directly. (With

more formal wording, I would define extrapolate as: extending an application of

a method or conclusion to a related or similar situation, assuming it is

applicable there as well.) You want to be able to pick out the essence of

something, and use that essence to say something more about similar things.

I happen to like bananas, pineapples and

mangos very much. These are all fruits from the tropics. They have that feature

in common (their geographic origin, and also climate). Therefore, I can conclude

that I probably like all or most tropical fruits. I would like to be able to

tell whether I will like coconuts, without trying them first. Coconuts are also

tropical fruits. I can then extrapolate my earlier conclusion to this other

tropical fruit, and say with some confidence that I will probably like

coconuts. This is an extrapolation.

This type of dummy-proof phrasing is mostly

to give you a taste of what is to come when we dive deeper into logics, but I

promise I will not be as obnoxiously explicit most of the time – only when I

really want to be crystal clear.

Anyhow, hopefully you now understand what

extrapolation means. It is a critical concept in the philosophy of knowledge,

and, actually, we do this nearly all the

time without thinking about it. It is instinct – an intuitive way of reasoning – because it is incredibly useful.

Without applying our knowledge to other areas, we would be stuck with only

knowing what we can see with our own eyes and hear with our own ears. (In a later post, we will come to the potential dangers

of that as well.) Extrapolation allows us to break free from the chains

of our limited self and explore other worlds we only have hunches about.

When you are about to cross the street, you assume the approaching car will stop, because other cars have in the past. When your friend offers you a new sweet he or she thinks you might like, you accept it because you usually like sweets, and because you usually like what he or she offers you.

When NASA is searching for life on other

planets, it is looking for signs based on what we believe allows us to live on our planet (atmosphere, dynamic planet

interior, water, carbon, etc.). When biochemists test new drugs on lab rats,

they assume the results in the rats will be similar to the consequences of

giving it to a human. Geologists are currently trying to work out whether east

Africa is about to split off from the rest of the continent, based on what clues

they have about such events in the past and what they observe today and

interpret as indicators of a continent splitting.

Extrapolations can be made in many other

areas as well, e.g. social sciences, and others where it is less obvious, such

as mathematics and art. If you are a keen mathematician or artist, take a

moment to think about when and for what purpose you use extrapolation in your work.

I hope you now begin to understand the

importance of logic as well. If you think about it, none of these examples are

more certain than the facts we base them on. I am actually not as fond of fresh

coconut as I am of the other tropical fruits; the hasty conclusion that I might

like all tropical fruits was evidently not true. I strongly doubt that every car you have met when crossing a

street has stopped for you; therefore, you cannot be absolutely certain that

the next driver will stop – the extrapolated statement that the next car

probably will stop before you becomes uncertain because it is based on every

car so far having stopped, which is not quite the case. I can bet that your

friend occasionally gives you something you surprisingly happen not to like,

and you have surely come across some sweet that just was not your thing.

If you understand the importance of

extrapolating knowledge, and thus the importance of logical reasoning, we can

start to look at the structure of logic.

The most basic way of structuring an

argument – basic in the sense that it is simple and clear, and therefore easily

brings out its essence – is making it into a syllogism. Syllogisms consist of two (or more) so-called premises and one conclusion that follows necessarily

– i.e. logically – from the premises. Let me give you a classic example:

P: All bachelors are unmarried men

P: Peter is a bachelor

C: Therefore, Peter is an unmarried man

I let the Ps

indicate premises and the C indicate the conclusion; it is not necessary, but I

want to avoid confusion.

So, that’s a syllogism!

It is that simple! Just to make sure you get the hang of it, try to restructure

this into a syllogism: Because John was late for class, and all latecomers must

be punished, he will sit in detention for an hour after school.

P:

P:

C:

I made it a bit

tricky for you, but, in fairness, few arguments you hear will be laid out so

plainly that you can make a syllogism by just copying and pasting the words. A key to good reasoning is to almost instinctively think in terms of syllogisms

– whenever you hear an argument, rearrange it in your head – and you will start

to notice how many times per day you hear incomplete reasoning all around you. That is one of the strengths of this

format: it is so easy to spot gaps in arguments.

Take a simple

example: ‘Fossils are remains of past life, so evolution must have occurred in

the past.’ For a start, you cannot make a syllogism with only one premise – not

without it becoming silly or redundant. You need to add at least one more

premise, preferably one that fills the jump between fossils and evolution –

i.e. shows the connection. If we add that ‘fossils are more or less different

from now-living organisms’, it starts to make more sense. Or does it? We have

still not quite touched upon the subject of evolution yet. ‘Evolution is

organisms changing over time’ could be just what we need. Shall we try it out?

P: Fossils are remains of past life

P: Fossils are more or less different from now-living organisms

P: Evolution is organisms changing over time

C: So, evolution must have occurred in the past

Although the

wording does not quite fit neatly, the line of reasoning now emerges as more or

less complete. It needs some more polishing, but it makes more sense now that

some large holes have been filled.

Now that you are

more familiar with the layout of syllogisms, I can introduce the concepts of

validity and soundness.

In logics, a valid argument is one where the

conclusion follows necessarily from the premises. For an argument to be

valid, its conclusion must be an indisputable and inevitable consequence of the

premises. Note that validity and truth are

not the same: arguments can be valid without being true. I will show you

how very soon.

A sound argument is one that is valid

and with true premises. If the premises are true, and the conclusion is

a necessary consequence of them, the argument is sound. Soundness can be

thought of as roughly synonymous with

truth.

To really show the difference, I think it is best if we consider some (pretty fun) examples. The validity of an argument is all about structure: we want a watertight chain of thought, and focus on that only. The truth or falsity of the premises is dealt with later; it is not our concern at this stage. We only want to make sure that the reasoning is correct.

A perfectly valid

syllogism could therefore be:

P: All bings are bongs

P: Ding is a bing

C: Therefore, ding is also a bong

Here is another

example of validity, which I took from a handout for a philosophy class:

P: All ostriches are teachers

P: Richard is an ostrich

C: Therefore, Richard is a teacher

Albeit utterly

nonsensical, both are valid. There is no sensible way of questioning the logic

in these statements, regardless of how absurd they sound. If you have trouble

seeing why, try exchanging the words for symbols or letters. I like to use

uppercase letters in italics, but you can choose any of your preference; there

is no rule for that.

P: All As are Bs

P: C is an A

C: Therefore, C is also a B

This is, so to

speak, a general formula for a valid syllogism. There are many other general

forms of valid statements, but this is the standard. Try exchanging A for ‘bird(s)’, B for ‘dinosaur(s)’ and C

for ‘(a) chicken’.
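If you like to tinker, the general formula can be sketched in a few lines of Python. This is only an illustration under my own assumptions: I read ‘All As are Bs’ as ‘the set A is contained in the set B’, and the animal names and the helpers `all_are` and `is_a` are made up for the example.

```python
def all_are(subset, superset):
    """'All As are Bs', read as: every member of A is also a member of B."""
    return subset <= superset

def is_a(individual, category):
    """'C is an A', read as: C is a member of the set A."""
    return individual in category

# Hypothetical toy data, invented for illustration only.
birds = {"chicken", "ostrich", "sparrow"}
dinosaurs = birds | {"tyrannosaurus"}  # built so that all birds are dinosaurs

premise_1 = all_are(birds, dinosaurs)    # P: All birds are dinosaurs
premise_2 = is_a("chicken", birds)       # P: A chicken is a bird
conclusion = is_a("chicken", dinosaurs)  # C: A chicken is also a dinosaur

# With this structure, true premises can never come with a false conclusion.
follows = (not (premise_1 and premise_2)) or conclusion
print(premise_1, premise_2, conclusion, follows)  # True True True True
```

Note that the code says nothing about whether birds really are dinosaurs; it only mirrors the structure of the argument.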

With the above

example, we are moving toward a sound

syllogism, i.e. one where the premises are regarded as true. Validity is a requirement for soundness, and so is that

the premises are true. The latter can be much more difficult to show, so we should not be hasty to call an argument

sound only because we believe the

premises to be true – a dangerously common pitfall – we must be absolutely certain, and there are few

ways to achieve that.

One type of

premises that are indisputably true are those that are true by definition. Look

back at the first example, the one about bachelors being unmarried men: if a

person is not a man or not unmarried, then he/she simply is not a bachelor. Thus, all bachelors are unmarried men, because

if they are not, then they cannot be appropriately called bachelors.

What a silly thing to be arguing about, right? Where does that lead us? All it does is divide the world into bachelors and non-bachelors, and allow us to say something about all bachelors (that they are men and unmarried) and something about all non-bachelors (that they are not men, or married, or both). Sounds daft. Or does it? We will return to this in a later post, because it opens up a quite intriguing discussion, but it is not my purpose to drag you into it here and now.

A second way a

premise can be regarded as true is if it is the conclusion of a previous sound

syllogism. How come? Consider this generalised example:

Syllogism 1

P: All As are Bs

P: C is an A

C: Therefore, C is also a B

Syllogism 2

P: C is a B

P: All Bs are Ds

C: Therefore, C is also a D

If you regard

Syllogism 1 as sound, it means you must accept its conclusion to be true. If

that conclusion is used as a premise in the next syllogism, that premise must

be regarded true, as it is the conclusion of a sound argument. If the remaining

premise also is true, then the valid Syllogism 2 is sound, and its conclusion

must be regarded as true as well – and can be used as a premise for more

syllogisms, if you so wish.
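The chaining can be sketched in Python as well. Again, this is just a toy under my own assumptions: I read the first syllogism as ‘all As are Bs, C is an A, so C is a B’, I model the categories as sets, and the set contents and the helper `all_are` are invented for the example.

```python
def all_are(subset, superset):
    """'All Xs are Ys', read as: the set X is contained in the set Y."""
    return subset <= superset

# Hypothetical toy sets, built so that A sits inside B and B sits inside D.
A = {"c", "a1"}
B = A | {"b1"}
D = B | {"d1"}
c = "c"

# Syllogism 1: All As are Bs; C is an A; therefore C is a B.
s1_premises_true = all_are(A, B) and c in A
s1_conclusion = c in B

# Syllogism 2 reuses the conclusion of Syllogism 1 as its first premise:
# C is a B; all Bs are Ds; therefore C is a D.
s2_premises_true = s1_conclusion and all_are(B, D)
s2_conclusion = c in D

print(s1_premises_true, s1_conclusion, s2_premises_true, s2_conclusion)
# True True True True
```

The point of the sketch is the hand-over: `s1_conclusion` is not checked again in Syllogism 2, it is simply accepted because the argument that produced it was sound.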

Anyhow, I hope you

begin to realise that syllogisms really are quite simple, as long as you have

grasped the essence. But, what is the

point of this? Why this structure? Why do we want valid arguments? Why do

we want sound arguments?

Please take a

moment to reflect on those questions yourself before reading on. I want you to

get into the habit of thinking for yourself about the meaning and importance of

the things you hear.

I hope you reached some interesting conclusions and, better still, that they were different from mine. That way, you might gain double insight into the purpose and usefulness of syllogisms.

One purpose of the

particular layout of syllogisms is to put it plainly and simply, so that anyone

and everyone can understand it and see the logic (or lack thereof) easily. In

short, it is all about clarity. In

addition, it can be thought of as a way of systematically approaching

intellectual problems.

Validity is, in

essence, equal to ‘logic’, in the sense that it is a means of preserving the truth. (Remember my first

statement in this section?) If you start with true premises and reason validly,

you will end up with truth (note that this is then a sound argument). If you

start with false or not entirely true premises and reason validly, you are

unlikely to reach a true conclusion, though it may be possible in theory:

P: All potatoes are presidents of the US

P: Obama is a potato

C: Therefore, Obama is a president of the US

If you start with

truth and reason invalidly, then you

have no clue of what you end up with. For all we know, you can start with false

premises, reason invalidly, and – by sheer luck! – reach a true conclusion.

However, you cannot be certain about its truth, since you cannot show logically

how you came to it. Even though it may be true, if you cannot show it

logically, you may have a hard time convincing others. (See the next syllogism

example below.)

The one thing you can

be absolutely certain about is that you can never

ever start with true premises, reason

validly and end up with a false conclusion. If your conclusion is

false, then either the premises cannot all be true, or the argument cannot be

valid. This is so because of the definitions

of validity and soundness: they are defined so that, when combined as I have

shown you, truth is a necessary and inevitable result; the key issue is showing

that an argument is valid and that the premises are true – i.e. the task is to show that your argument is sound, because, once you have, it must be accepted as true. That is

all you need to do, but it is easier said than done!
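For simple ‘if … then’ arguments, this guarantee can be checked mechanically by trying every combination of true and false. The sketch below is my own illustration, not a standard tool: the helper names `implies` and `is_valid` are made up, and the two argument forms are chosen only to contrast a valid form with an invalid one.

```python
from itertools import product

def implies(p, q):
    """'If p then q' is false only when p is true and q is false."""
    return (not p) or q

def is_valid(premises, conclusion):
    """Valid means: no combination of truth values makes every premise
    true while the conclusion is false."""
    for p, q in product([True, False], repeat=2):
        if all(premise(p, q) for premise in premises) and not conclusion(p, q):
            return False
    return True

# Form 1: if p then q; p; therefore q.
form_1 = is_valid([implies, lambda p, q: p], lambda p, q: q)

# Form 2: if p then q; q; therefore p.
form_2 = is_valid([implies, lambda p, q: q], lambda p, q: p)

print(form_1, form_2)  # True False
```

Form 1 passes because there is no way to make both premises true and the conclusion false. Form 2 fails because such a combination exists (p false, q true), so true premises do not protect its conclusion.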

Take these lessons with care, and be careful not to misuse what you have now learned. Although showing the validity of an argument is relatively easy, showing its soundness, i.e. the unquestionable truth of its premises, can be nearly impossible in a great many cases. Falsely describing an argument as sound, perhaps just because you agree with the conclusion, is a pitfall we have all fallen into, and probably will many times again. We should all be careful not to declare something sound unless we can be absolutely sure!

It is also easy to

be fooled into thinking that an invalid argument is valid. Think about this

one, taken from another handout on logics:

P: Vegetarians do not eat pork sausages

P: Gandhi did not eat pork sausages

C: Therefore, Gandhi was a vegetarian

Both premises and the conclusion are true, but the argument is actually invalid! You might see this more clearly if you try it with symbols:

P: No As are Bs

P: C is not a B

C: Therefore, C is an A

It just so happens that, in this case, C is one of the non-Bs that are also As, but since not all non-Bs are As, the conclusion cannot be regarded as a necessary consequence of the premises. Since the argument is not valid, it is not sound either.
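One way to convince yourself that a form is invalid is to hunt for a counterexample: a situation where the premises hold and the conclusion does not. The Python sketch below does this by brute force over a tiny made-up universe. The helper names and the two-element universe are my own assumptions, and finding no counterexample in such a small search is a hint rather than a proof.

```python
from itertools import combinations

def subsets(universe):
    """Yield every subset of the universe as a set."""
    items = list(universe)
    for size in range(len(items) + 1):
        for combo in combinations(items, size):
            yield set(combo)

def find_counterexample(universe, premises, conclusion):
    """Return (A, B, c) where the premises hold but the conclusion fails,
    or None if no such case exists within this universe."""
    for a in subsets(universe):
        for b in subsets(universe):
            for c in universe:
                if premises(a, b, c) and not conclusion(a, b, c):
                    return a, b, c
    return None

universe = ["x", "y"]  # hypothetical individuals

# The sausage form: no As are Bs; c is not a B; therefore c is an A.
sausage_form = find_counterexample(
    universe,
    premises=lambda a, b, c: a.isdisjoint(b) and c not in b,
    conclusion=lambda a, b, c: c in a,
)

# The standard form: all As are Bs; c is an A; therefore c is a B.
standard_form = find_counterexample(
    universe,
    premises=lambda a, b, c: a <= b and c in a,
    conclusion=lambda a, b, c: c in b,
)

print(sausage_form is not None, standard_form is None)  # True True
```

The search finds a case that breaks the sausage form (someone who is neither an A nor a B), while the standard form survives every case it is given.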

Does that make it less true? No, not at all, but it makes

it less convincing to critics. We

have no guarantee that the conclusive statement is true (let us disregard the

fact that the second premise can be questioned and focus on the problem of

invalidity now), since we cannot show that it is a necessary consequence of the

premises. The conclusion is questionable, even though the premises are not.

Detecting

invalidity is not an easy task for a beginner. You need to drill yourself in

the art, and the best way to begin is to start breaking everything down into

syllogisms, and exchanging the key words for symbols if required. Eventually,

it might become second nature to you, and you can ‘feel’ gaps in arguments more

and more easily.

However, there are

some common types of invalid reasoning called fallacies. These are, so to speak, pre-described logical pitfalls.

They are distinct special cases of invalid reasoning, but strikingly common. I

will give you some examples here.

Post hoc ergo propter hoc is Latin for ‘after this, therefore on account of this’, and refers to assuming that because B follows A, A is the cause of B; in other words, assuming that correlation means causation.

This reasoning can

be stated as a syllogism:

P: Whenever A occurs, B follows

P: Whenever B occurs, so has A

C: Therefore, A is the cause of B

Why this is

invalid might not be obvious straight away, because it looks structurally

correct. However, you must note that occurrence and cause do not have a two-way connection. Since occurrence

and cause are not invariably connected, the conclusion does not follow

necessarily from the premises; thus, the argument is invalid.

If A causes B, they will naturally occur together or after one another; however,

looking at it from the other end, just because A and B occur in close

association, there is no guarantee that

either causes the other. For example, there

may very well be another factor, C,

which causes both!

A really simple

example, but that one might not think of immediately, is that although night is

followed by day, night is not the cause

of day. (Both are caused by the Earth’s rotation with respect to sunlight

coming from only one direction.)
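The idea of a hidden common cause can be sketched as a toy Python model. Everything here is hypothetical: the function `world`, its parameter names, and the list of observations are invented purely to show how A and B can always appear together without A causing B.

```python
def world(c, forced_a=None):
    """Hypothetical toy world: a hidden factor c sets both a and b.
    b never looks at a, so a cannot be the cause of b."""
    a = c if forced_a is None else forced_a
    b = c
    return a, b

# Passive observation: a and b always show up together.
observations = [world(c) for c in (True, False, True, True, False)]
always_together = all(a == b for a, b in observations)

# Intervention: switch a on by hand while the hidden factor is off.
a, b = world(c=False, forced_a=True)

print(always_together, a, b)  # True True False
```

Merely watching this world, you would see a perfect correlation. Only when you step in and force A yourself does it become clear that B does not respond.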

This is a fallacy

made often in science, and also in other areas of knowledge. However, the scientific method is beautifully capable of

dealing with that issue, which will be shown in a later post, when we explore

the scientific method model in detail. Ideally, if we notice that two

phenomena are correlated, it should give us a hint that it might be worth

investigating whether the connection could be causal or just coincidence; we

must at least be careful not to jump to conclusions!

Equivocation is the use of different meanings of a word in the

same argument. Take this example from a philosophy handout:

P: A hamburger is better than nothing

P: Nothing is better than good health

C: A hamburger is better than good health

The ambiguous use

of the word ‘nothing’ makes the argument nonsensical. Strictly, however, the

syllogism is formally valid, since

the conclusion does follow

necessarily from the premises. This is a very important limitation of logics, because there is no method to deal

with this. Discussing such reasoning will almost always boil down to arguing about the meaning of the words, as so many

arguments tend to do.

This problem is

probably not more or less common in science than in any other area of

knowledge; ambiguity is an inherent problem in language, introduced by our

desire for variation in ways of describing things. The aesthetics of language

has thrust a wedge through an aspect of logics.

Ad hominem means ‘against the man’, and is the fallacy of attacking or supporting the person instead of the argument. Clearly, the invalidity is a result of completely missing the point. Instead of examining the statement per se, you accept or reject it because you like or dislike the person who made the claim.

It may very well

be that this person you like and agree with is

right, but only in the same way that invalid reasoning could result in a

true conclusion: by sheer luck. There

is a possibility that the person is correct, but it is not guaranteed. Thus, it

is a type of fallacy.

In science and history, this problem is

more familiar under the term bias,

which is favouring or disfavouring a

view, person or group, usually in an unfair way. Especially keen students

of history should be aware of the importance of carefully assessing the degree

and direction of bias in a source, and constantly taking care to circumvent the

angled view of the writer. In science, the problem

is less pronounced thanks to the principles of replicability and falsification,

which will be explained in detail at a later point.

It is the same

problem when we appeal to what the majority

of the people believe in. Indeed, if most believe in something, there ought to

be a good reason for that belief, or else it would probably not have been so

wide-spread. However, think about times when society considered slavery, racism

and sexism acceptable and even natural. (Note that this case has little

relation to logics: in past times, these horrendous things were the order of

the day because it had been so for long, and the people in power saw no good

reason for change; it is a matter of social norms, far removed from strict

logical reasoning. In the same way, trusting in the opinion of the vast

majority is not logical reasoning, but simply a matter of trust.)

A sort of grey area is when we trust authorities. Especially

in modern times, when our collective knowledge is so vast and deep that a

single individual simply cannot know or try out everything for him-/herself, we

must, in practice, trust authorities

in relevant areas that we have less experience in or knowledge of.

I am sure many of

us are eager to know what gravity is. Really, what is it? What makes a body

attract others just because it is bigger? That just makes no sense in itself. A

good friend tells me there is mathematical proof for it, but when I ask her to

explain it, she cannot even give me the basics. Evidently, she does not

understand the proof well enough to summarise it to someone who has not done

much physics. How can she then claim that there is proof for gravity? Her physics

teacher probably told her so, and maybe even demonstrated it, but the

essentials are too esoteric (i.e. understood only by very few people with

highly specialised knowledge or interest) for her to fully grasp the concept.

Perhaps she was convinced of it when she saw it demonstrated, but did not

assimilate (i.e. take in) the proof, only recognised and accepted it. What happened here is that she relied on an authority in the relevant area (her physics teacher) for the truth of a concept that she did not herself understand, and perhaps never will. She trusted that those who understand gravity know what they are doing.

In practice, we have to rely on authorities in a great many cases; since we simply cannot investigate everything ourselves, we have no choice but to trust others who have spent much of their lives examining the particular field of knowledge. The best we can do is prioritise: investigate in depth only the parts we find most important to ourselves, and be content with trusting people to do their thing right in other areas.

Is this bad? Not in all cases! It would be arrogant not to acknowledge that there are plenty of people in the world who can do things better than you can. I know how clumsy I am in mathematics, so when my teacher gets a different result, I naturally accept that I am probably wrong and try again. I can be equally clumsy in a chemistry laboratory (unless we are handling dangerous chemicals, in which case I naturally take the utmost care), so when the ammeter (a device that measures electric current) reads really weird values from a galvanic cell (a set-up of chemicals arranged so that their reactions produce electricity), I instinctively presume something is amiss with my set-up, not that my odd results disprove the theory of galvanic cells! There are many more examples where I would prefer to trust an authority because I am sure that he/she is a more capable investigator (in the particular area, or in general).

What makes a good

authority? We do not want to rely on just about anyone; we want to be sure that

the person we place our trust in is worthy of it. This is a rather interesting

question, but its full complexity is not relevant here, so I will give only one

short, intuitive answer: the authority should at least be an expert in the relevant field of knowledge.

Argumentum ad

ignorantiam means ‘argument from ignorance’, or

otherwise phrased as ‘appeal to ignorance’. This is when someone claims something to be true because there

is no evidence to disprove it.

P: There is no evidence that A is false

C: Therefore, A must be true

Clearly, something is lacking here. We need at least one more premise for the syllogism to be complete. What if we try this:

P: A must be either true or false

P: There is no evidence that A is false

C: Therefore, A must be (or is probably) true

Now it looks more complete, but it is still not strictly valid: a lack of evidence that A is false does not guarantee that A is not false. Justifying the new premise will also be difficult. It may work in some cases, but experience tells us that very few things are black and white: life is more of a mixture of shades of grey. In fact, assuming the first premise to be true without proper reason is another fallacy, which we will consider next.

False dilemma is when you,

without justification, assume that only

two alternatives exist. It is also referred to as binary thinking, or ‘black and white thinking’. Repeating what I

said above, clearly, this is not true in the vast majority of cases.

Sarcastically,

humourist Robert Benchley once said: “There

are two kinds of people in the world: those who divide the world into two kinds

of people, and those who don't”.

As a side comment,

I would like to add that science has put considerable effort into both showing that many natural phenomena are

determined by a variety of factors that can interact with one another, and exploring how these function by attempting

to model them, chiefly with the help of mathematics. Although I expect that

there are scientists out there who have tendencies to commit these fallacies,

such errors tend to be checked and corrected by other scientists, so that,

overall, science works in the general direction of increasing the variety of

grey hues we are aware of.

To give an

example, consider the rate of a general chemical reaction. The rate of a

reaction is the speed at which it occurs or is completed, and naturally depends

on the reactiveness of the involved chemicals. However, countless experiments

have clearly established that the rate also depends to a strong degree on the

concentration (i.e. how many particles there are to react, and, really, how

tightly packed they are), temperature (i.e. how much the particles move about

spontaneously in a random direction) and pressure (i.e. collision frequency:

how often two particles meet) of the compounds. If a gas or liquid reacts with a

solid, the size of the solid is also important: one large clump has only a

small surface where the other substance can react (it cannot reach below the

surface until that goes away), while fine powder has many spots where it can

make contact with the fluid. In addition, there are certain substances that

speed up a reaction without being directly involved in it; these are called catalysts. Unless you have a special

case where you have reasons to suspect that one or two of these factors

overshadow all others so that they become negligible (ignorable), you cannot

apply binary thinking to chemical reaction rates.

False analogy is the

fallacy of (falsely) assuming that because two things are

similar in one or more respect they must be similar in another respect.

Normally, you would not assume that without a good reason; otherwise you will not

be taken seriously. I can think of many silly examples, such as: since squids

and knights have mantles, they must both be spineless; some a bit less silly:

rocks and tables are hard, so both should burn well; and some more realistic:

Joseph Stalin and Fidel Castro were both communist leaders who promoted

personality cults, so they must both have been paranoid and conducted mass

purges in their countries.

In general, people

in my experience have been careful with this (so coming up with examples was

actually really hard), perhaps because it is such an easy-to-spot fallacy: you

should not even need to set up a syllogism to notice such invalidity in an

argument.

Still, we can

sometimes be drawn towards such thinking, in particular when we want to extrapolate

information from one situation to another. (Recall the meaning of ‘extrapolate’

from the beginning of this section.) It is therefore important to be conscious

of the risk of committing a fallacy when attempting to make extrapolations.

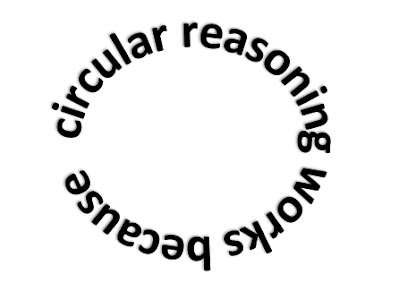

Circular reasoning is the

real pitfall in most rational disciplines. Circular reasoning is the act of assuming the truth of the very thing you

intend to prove. It is also known as a vicious

circle or begging the question.

In syllogism

terms, a circular argument is one where one or more of the premises depend on the

conclusion to be true. Thus, the conclusion can only be true if it is

true, and the reasoning can only be valid if it is valid. The argument does not

show that the conclusion is true. The

only way of breaking the circle is by assuming the truth of the conclusion in

order to justify one of the premises, but that makes the syllogism, which is

designed to demonstrate the truth of the conclusion, utterly redundant since

you already have assumed the conclusion to be correct.

It is difficult to

think of an example of a formal syllogism with circular reasoning, perhaps

because the very structure of a syllogism in some way could be incompatible

with circular arguments (an interesting thought indeed). Colloquial examples

are easier to recognise, but, again, it is tricky to show formally how they are

circular.

An example of a

not-so-formal circular syllogism is:

P: John is a good businessman

P: John has earned a lot of money through

his business

C: Therefore, John must know how to make

good business

So, basically, one

of the things that make John a good businessman is that he is a good

businessman.

It is called circular reasoning because it goes

around and around in its own circle; it leads nowhere. It will not convince

many that John makes good business if someone keeps using ‘John is a good businessman’ as an argument. When they ask why he is a good businessman, he/she

answers: because he makes good money,

and when they ask why is that, he/she

repeats: because he is a good businessman.

Such a

conversation can be incredibly frustrating if the person in question does not

realise his or her argument is circular. Therefore, it would be wise to try to

break it down into components and try to arrange it into something like a

syllogism, to show why the reasoning is circular and thus invalid.

Although they may

appear formally valid, circular arguments are actually not. Their conclusions do not follow from the premises: they are essentially one of their premises,

or at least part of one.

Circular arguments

are common: I know myself to have made quite many. Luckily, they are not hard

to notice – informally or formally. So, as long as you stay attentive to them,

and when faced with one, know how to show that it is invalid, and are prepared

to attempt to reformulate the argument so that it is no longer circular, you should

be just fine.

I have one last

fallacy I wish to point out, but I cannot remember its name. It is not really a

formal fallacy, so its invalidity cannot be clearly shown in a syllogism. It is

when you think you have explained

something by giving it a name. For example, say you ask me how the sun

warms up the Earth, and I reply: “solar radiation”.

This is not a true

logical fallacy, since this type of argument is not always wrong, while logical fallacies are definitely invalid. In

this case, whether the question has been answered depends on the asker’s

understanding of the term ‘solar radiation’: it is all about whether the person

asking the question has enough knowledge on the subject for the explanation to

be sufficient. However, in many cases the explanation might not be good enough,

but the person who confidently says a few fancy words sounds like he knows what

he is talking about, so you accept it rather than look like a fool. Not a good

idea: that person might have just as little a clue of what he is saying as you

do. There is nothing wrong or shameful in asking for a more comprehensive

explanation, especially if it is not your strong subject, so don’t be afraid to

ask!

Next

Now you have been

introduced to the basics of logical reasoning. Hopefully, you understand and

accept that logics aims to produce chains or patterns of reasoning that are

always correct, so that if you start with true statements and reason validly,

the end product will be just as true. However, what I hope more is that you

have asked yourself: ‘well, what does this have to do with science?’ This is a

very appropriate question, because science has a quite different way of

reasoning!

And that is

precisely the point I want to finish this section with: science does not concern

itself with reasoning that is conclusive, that cannot be debated. It can be

said that science only concerns ideas that can be disputed. For example, the

theory of gravity acting on every object on Earth could in theory be disputed if, say, you drop a pen and it does not

fall to the ground. An unlikely event, perhaps, but it means that there are

ways to question the theory of gravity, and that is one of the qualities that

makes the theory scientific: it is testable

(more on that in some later post).

Is this bad, or is it for the better? It means that science is never certain, that scientific ‘facts’ always have the possibility to be incorrect. Bad for science. However, it means that science becomes useful as a method to try to deal with those un-provable ideas, a way to handle the uncertainty, as best we can. Good for humanity.


Thus, science is

fundamentally rather different from logics. Can they be said to be unrelated?

Both are branches of the philosophy of knowledge, but they deal with different

aspects of the world. But, in many ways, science strives to be based on logical

reasoning. So, in a sense, science uses

logics to answer questions that pure logics cannot! One difference lies in

the start

premises.

While pure logics prefers fundamental premises that are true by definition,

science deals with premises that are mere generalisations.

This will be the

topic of the next section: inductive vs.

deductive reasoning.


Part

Three will

be about practical rather than theoretical issues with inductive reasoning: the

limitations of sense perception –

the link between our minds and the outside world – and why we should keep the fallibility of our

senses in mind when thinking about science, with its particular emphasis

on observing the world.

Part Two: Deduction vs. induction

The first part

introduced the essence of logics, syllogisms and the concepts of validity and

soundness. It was intended to present a fundamental way of thinking

philosophically about big words such as truth and certainty, and to emphasise

that ‘logical’ does not necessarily mean ‘true’. I would strongly recommend that you read Part One before proceeding.

I know it is a long text, but it is also essential to be familiar with the

concepts that are discussed there.

In Part Two, I

want to take the discussion a step away from general philosophy and closer to

concepts more relevant to science: deductive

and inductive reasoning. These are still fundamental to many, if not all branches

of philosophy, but inductive reasoning in particular is central to the natural

sciences.

Deductive and inductive reasoning are both forms of extrapolation (which was discussed in Part One). Strictly speaking, deduction is the act of reasoning from the general to the particular, while induction is the act of reasoning from the particular to the general. Before you get a headache trying to understand what on Earth that even means, let me talk you through a few simple examples.

Deduction is thinking

that if all bananas are sweet, the banana you are about to eat will taste

sweet. Induction, on the other hand, would be the reverse: if you eat a banana

and find it sweet, you can expect other bananas to be sweet as well. In this

case, ‘the general’ refers to all bananas, and ‘the particular’ is the banana

you have or ate.

So, knowing that

the general (all bananas) has a certain feature or attribute (tasting sweet),

you may deduce that the particular

(the banana in your hand) might possess that attribute as well. Conversely,

observing an attribute (sweet taste) in the particular (the banana you ate) may

lead to the inductive conclusion that

that feature may belong to the general (all bananas).

If you are

observant, you may already be wondering: does induction come before deduction

then? How would you know that all bananas are sweet, unless that is a

conclusion you have reached inductively by tasting many bananas? Well noted –

but do not jump to conclusions! Although that may be true in many cases, there

are many exceptions as well. (Trick quiz: was that inductive or deductive

thinking?)

The main type of

deduction that does not rely on induction is based on definitions. A classical example of something that is true

by definition is that all bachelors are

unmarried men. This is because the word bachelor means unmarried man. Thus, if you meet someone you know is a

bachelor (he may have presented himself as such), you can deduce that he (!) is

an unmarried man. This is not because you have met many bachelors and they have

all turned out to be unmarried men, but because if he is not an unmarried man,

then he is not a bachelor (or… maybe he is lying to you).

You may recall

this example from Part One as the first example of a syllogism. I repeat

it here because it is not only a sound syllogism, but also one that illustrates

deductive reasoning:

P: All bachelors are unmarried men

P: Peter is a bachelor

C: Therefore, Peter is an unmarried man

Here, we go from

the general (all bachelors) to the particular (Peter). We know something about

the general, something that applies to all of them; and from that, we reason

that this something is true also for every particular individual or unit in

this general group. We reason from the

general to the particular in deduction.

Recall that

deduction and induction are inferences,

and as such not necessarily true. Again, what we are concerned about is how

we reason when we infer. We want the reasoning to be valid, so we can

be certain that the deductive or inductive conclusion is as true as the initial

premise. In the above example, the first premise is true by definition, and

therefore unquestionable (the only grey area would be a widower, a man whose

wife has died) – unless you want to challenge the definition, but then it

becomes a matter of language, not the nature of the world. The second premise,

however, may or may not be true. As a result, the conclusion is only as certain

as the second premise. If Peter is not a bachelor, then he is either married or

not a man.

Note that that example also can be written as:

P: All unmarried men are bachelors

P: Peter is a bachelor

C: Therefore, Peter is an unmarried man

Or:

P: All bachelors are unmarried men

P: Peter is an unmarried man

C: Therefore, Peter is a bachelor

And so on…

Both attributes

(being a bachelor and being an unmarried man) are mutually inclusive: by definition, each implies the other, which is the only reason these rearrangements still work. But there are deductive syllogisms that

are not true both ways, so to speak. Consider an example:

P: All tall people wear hats

P: Gareth is a tall person

C: Therefore, Gareth wears a hat

This cannot be written as:

P: All tall people wear hats

P: Gareth wears a hat

C: Therefore, Gareth is tall

This is because

the key verb here is not ‘to be’: being tall and wearing a hat are not the same thing. Think about it. The

first syllogism claims that all tall people wear hats (a ridiculous statement,

perhaps, but let us take it as a true fact for the purpose of this explanation),

but that does not imply what the second syllogism states: that all people that

wear hats necessarily are tall. In contrast, bachelors are unmarried men, and as a consequence unmarried men are also bachelors!
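For those who like to see this checked mechanically, here is a minimal sketch in Python. It assumes a tiny made-up world of two people (person 0 plays Gareth), and the helper names `worlds`, `all_tall_wear_hats` and `is_valid` are my own illustrations, not any standard library. It tries every possible combination of tall/not tall and hat/no hat, and calls an argument valid only if no combination makes all premises true while the conclusion is false.

```python
from itertools import product

def worlds(n=2):
    """Yield every assignment of (tall, wears_hat) to n people; person 0 is Gareth."""
    pairs = list(product([False, True], repeat=2))
    return product(pairs, repeat=n)

def all_tall_wear_hats(world):
    """Premise: every tall person in this world wears a hat."""
    return all(hat for tall, hat in world if tall)

def gareth_is_tall(world):
    return world[0][0]

def gareth_wears_hat(world):
    return world[0][1]

def is_valid(premises, conclusion, n=2):
    """Valid = no world makes every premise true and the conclusion false."""
    for world in worlds(n):
        if all(p(world) for p in premises) and not conclusion(world):
            return False
    return True

# First syllogism: tall people wear hats, Gareth is tall => Gareth wears a hat
first_form = is_valid([all_tall_wear_hats, gareth_is_tall], gareth_wears_hat)

# Reversed syllogism: tall people wear hats, Gareth wears a hat => Gareth is tall
second_form = is_valid([all_tall_wear_hats, gareth_wears_hat], gareth_is_tall)

print(first_form, second_form)
```

The first form passes and the reversed form fails, because there is a possible world where a short Gareth wears a hat while every tall person also wears one. Note that this only checks validity (the shape of the reasoning), not whether the premises themselves are true.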

Hopefully, you now

understand the basics of deduction, and recognise that it has important

limitations (think about how much we can actually use deduction for…). Please

keep them in mind, as I will now explain induction, and later go on to compare

deductive and inductive reasoning.

I have been

struggling to formulate the following example of inductive reasoning as a

sensible syllogism, but I think the message can go through as a simple

statement anyway: All swans observed so

far are white; therefore, all swans are probably

white. This is a generalisation based on observations of the particular

(the portion of all swans that we have observed), which concludes that the general

(all swans that exist) possesses the same traits (colour) as the particular. We

reason from the particular to the general.

(As a side note, I

might add that this particular example, although classical, becomes problematic

when you think about cygnets (young swans), which have a dark grey plumage. For that

inductive claim to be accurate, cygnets must be excluded from the definition

of swans, meaning that sub-adults are not considered members of the species,

which further suggests that infants are not humans, and that abortion must be

ethical… Whoa, maybe I should calm down a bit there, hahaha! Actually, that

induction can be improved by specifying that adult swans are white!)

Naturally, you

wonder how we can be sure that all swans are white, when we haven’t seen them

all. How can we know there are not

black, blue or pinkish red swans out there?

Of course, we

cannot know that. We can only be fairly

certain, or guess that it is so.

It is simply a logical impossibility to be absolutely certain about a

(non-defining) attribute of a group without having observed every single member.

However, unless we

have a reason to suspect that there may be deviations from the observed

pattern, we can be quite confident that the pattern will hold even for

situations that we have not observed. Still, that is no guarantee, only a probability.
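To make the fragility of such a generalisation concrete, here is a small sketch in Python. The function name `generalise` and the list of swan colours are invented for illustration (this is not real data): the function extends an attribute to the whole group only while every observation so far agrees.

```python
def generalise(observations):
    """Inductive step: if every observation so far agrees, tentatively
    extend that attribute to the whole group; otherwise make no claim."""
    distinct = set(observations)
    return distinct.pop() if len(distinct) == 1 else None

swans_seen = ["white"] * 1000      # made-up sample, not real data
before = generalise(swans_seen)    # tentative claim about all swans

swans_seen.append("black")         # one hypothetical counterexample
after = generalise(swans_seen)     # the generalisation no longer holds

print(before, after)
```

A thousand agreeing observations support the claim, yet a single disagreeing one is enough to withdraw it. That asymmetry is why an inductive conclusion is only ever probable.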

Inductive

reasoning may be regarded as the core of

science. Scientists observe features of the world and then, by working out why these features are the way they are,

try to predict what will happen in a similar situation, or, if their

understanding is deeper, predict what can happen if the situation changes. This

is all based on generalising from a limited number of observations about

unobserved events, groups, things, whatever. These generalisations are then

usually tested by observing more examples of the unobserved things, and if the

generalisations appear true, hooray!

The reason

for relying on induction is simply that we cannot observe the whole world. We have neither the time

nor the labour to do it. If we want to say something about what we have not yet

seen, we nearly always have to use inductive reasoning, based on what we have

seen so far.

As long as we are

all aware of the inescapable problem of uncertainty in induction

(deduction may very well be uncertain too, but it is at least possible to be

entirely certain of a deductive statement, while absolute confidence in an

inductive claim is unfeasible), as long as we treat those conclusions with

care, this way of reasoning is amazingly

useful, because it allows us to at least approach a truth about something

unknown. Deductive reasoning is limited to what we already know – mostly what

we know because we defined it that way. We will discuss this more later, in the

main comparison between these two ways of thinking.

However, there is one problematic aspect of induction, one that

causes trouble for all branches of science that deal with predicting the future, or understanding the past (e.g. predicting

natural disasters such as climate change or volcanic eruptions; predicting

evolution and genetic changes, including extinction or survival of species;

calculating how much longer our resources will last, and the implications of

their over-use; and all sorts of things that lie at the heart of what can be

seen as one of the main utilities of science).

To illustrate this

fundamental problem with the inductive way of reasoning, let us consider another

example:

P: The sun has

risen every day since recorded history began

P: Tomorrow is a

day

C: Therefore,

the sun will probably rise tomorrow

Intuitively, this

seems correct, but it is actually not

even valid. To make this reasoning valid, we must assume that the world

will work the same way tomorrow that it did today and has in the observed past.

In other words, we need to assume that

the universe works in a consistent

manner.

That is a very

intuitive claim, since it seems silly to think otherwise: we have little or no

evidence to suggest that the world will function differently tomorrow, or any

time in the foreseeable future. In the same way, it is against common sense to

think that the universal laws of nature were different in a time before our

own. Scientific evidence suggests that there was a time when there was no sun,

and a time when there was a sun but no Earth. Scientists use other evidence to

predict that the sun will collapse one day, if it runs out of ‘fuel’. Hence the

word ‘probably’ in the conclusion: it safeguards against the chance that the

specific example might change.

Still, what we

must assume in order to call inductive syllogisms of that nature valid,

is that the fundamental laws of the universe – the laws about how things exist

and behave – have always been the same, and forever will be. We can accept that

particular examples may be different, but the underlying thought is that the

basic features of the universe are constant. (I am implying that the sun and

Earth are not basic features of the universe.)

But, the problem

is, can we really justify that assumption? Based only on what we have seen in

the past and present, can we justify the

claim that the sun is more likely to rise than not? The painful problem is

that the assumption that ‘what has happened every day until now is likely to

happen tomorrow as well’ can only be justified if we assume that ‘the world

always has and always will work the same way’, which is the very thing we want to show with the first assumption. Thus, we

have one assumption that only can be justified by another assumption, which in

turn is only justifiable by accepting the first assumption. This circular

reasoning is a big bad fallacy, as you may recall from Part One.

I quote a handout

from philosophy class:

If nature is uniform and regular in its

behaviour, then events in the observed

past and present are a sure guide to unobserved events in the unobserved past, present and future. But

the only grounds for believing that nature is uniform are the observed events in the past and present.

We can’t seem to go beyond the events we observe without assuming the very

thing we need to prove – that is, that unobserved parts of the world operate in

the same way as the parts we’ve observed.

This is the

problem with attempting to extrapolate inductively about the past or future: the

necessary assumption cannot be logically justified. Although it seems

intuitive, it is actually a fallacy.

Another reason to

question the validity of the assumption of the universal laws being constant

across time is that scientists also seem to hold the belief that everything is constantly changing.

Unless they mean ‘everything except the

universal laws is constantly changing’, we are looking at a troublesome inconsistency here (inconsistency, in

the philosophical sense, is holding

beliefs that contradict one another). The universe cannot be the same and, at the

same time, change, since remaining the same is the same thing as not

changing, by definition. One of those beliefs must be discarded (except if the

constancy solely refers to the universal laws, and the change refers to

everything else), which is tricky since both ideas are rather intuitive. We see

things changing with time every day, every week, every month, etc. But we also

see that many things stay the same.

Still, even though

there are logical reasons for doubt, things that science has accomplished,

using those very methods, seem (!) to have hit something right somehow.

Although the reasoning seems to limp, it appears to have reached the goal.

Either the assumption that the world works in a consistent way just happens to be true (note that

circular reasoning does not imply falseness: that an argument is circular only

means that it cannot be shown to be true or false, but it has no

bearing on whether it is actually true or false – it is either true or false or

somewhere in between, but it cannot be demonstrated logically, and is therefore

not good reasoning, even though it might be correct, just by luck!), or it is almost true, or it simply does not

matter whether it is true or not, and the logical analysis of the flaws of the method

is wrong somewhere.

In Notes from Underground, Fyodor

Dostoyevsky writes that “man has such a predilection for systems and abstract

deductions that he is ready to distort the truth intentionally, he is ready to

deny the evidence of his senses only to justify his logic”. I interpret it to

be questioning the assumption (really) that our logic has anything to do with the

natural world. How can we know that logics really describes how the world

works, and not just the way we humans think? This is a

really interesting topic, one which I hope we will come back to later.

But, now I think it is about time we get on with comparing deduction and

induction.

In general, it can

be said that deduction is more certain

but less informative, while induction is less certain but more informative.

(Or, really, one should say that deduction has greater potential of preserving the certainty of its

premises, while a great deal of certainty may be lost through inductive

generalisation.)

This is really

because of the nature of these ways of reasoning. When you argue from the

general to the specific, you just apply what you know about a group to a

particular member of that group. If I know that most rocks are hard, I can be

fairly sure that if I touch a rock, it will feel hard. In contrast, when you

argue from the specific to the general, you are dealing with the uncertainty of

whether the feature you are reasoning about applies to the whole group or not.

In deduction, you know it applies

throughout the group, and can therefore be confident that any particular member

will also possess that feature; but, in induction, you cannot be sure that the

feature is something that applies to the whole group based on only one or a few

particular examples. Although many rocks are grey, it happens to be that colour

is not consistent among rocks – there are many rocks that are red, white,

beige, green, black, etc., and many have multiple colours!

But, I hope you

realise that you cannot reason deductively without knowing anything about the

whole group! Therefore, deduction is really not very informative at all.

Deduction can only tell you things about particular examples from a broader

category of which you already have a lot of information, and the information

you apply to these particular examples is already known. Deduction is a way of

inferring information from broad category to a narrow part of that or a similar

category, rather than discovering new information. It is a tool of inference,

not investigation. Deduction is nearly useless in the face of an unknown group

of things.

Induction is the

method of choice when you want to learn about something new. By making

(careful) generalisations about the whole group from a limited sample, it

provides a platform for beginning to

study this group. These generalisations are usually checked by observing

additional examples from the category, and, if the generalisations seem to

hold, you keep looking for more particular examples; if you find that there are

too many exceptions to your generalisation, you should reconsider or discard

that idea and start over, drawing new generalisations from the examples you

have now studied. (This systematic approach is not part of the essence of

induction, but rather the way it is used effectively in science to learn about

the unknown.)

While induction

makes it possible to explore, deduction cannot do much more than point.

(But, note well

that induction does not allow us to explore completely

unknown situations. We always need at least one example of the particular

to make generalised or inductive statements. When faced with the complete

absence of specific examples, loose inference based on educated guesswork is

probably the best we could do. Ideally, these guesses should assume the same

predicting nature of inductive generalisations: the guesses should encourage

explorers to test the ideas, by finding examples of that unknown, and comparing

the guesses with observations.)

As already

mentioned before, deduction is often

preceded by inductive investigations. Since deduction can only preserve certainty, never increase it,

it follows that deductive conclusions made from inductive premises are only as

certain as those inductive claims. This is a practical limitation of deduction.

However, induction

alone does not take us far. It can help us learn about a category of entities

in the world, but that is where it stops. Deduction

is one way of making use of information gained from induction, by allowing

us to apply the general knowledge to specific situations. If we have observed

the consequences of earthquakes and learned about them through induction, we can put

this general information to use by applying it deductively on earthquakes we

experience now, and better know what to do when they occur and how to act after

they have passed.

In other words,

deduction and induction seem to come hand in hand, combined, in practice,

although they are each other’s opposites, theoretically.

The final point I

want to make in this comparison is about the role of language in deduction and induction. In one (philosophical)

sense, language is based on inductive generalisations: we implicitly organise the

world into general categories by putting names on them, based on common

characteristics. We notice that some things have much in common with each

other, but still are different from everything else, and call them something

unique, e.g. dog, table, teacher. Dogs bark, but tables, teachers and

everything else do not; tables have a specific shape and function that make them tables,

etc.

We also implicitly

use deductive reasoning by expecting similar behaviour from members of the same

‘thing’, which may be distinct from other ‘things’. For example, if you punch a

dog, it will probably feel pain, and maybe bite you; if you punch a table, the

table might take damage, but will not react, and you will surely hurt yourself;

if you punch a teacher, you are in big trouble.

In that sense, by

using language, you are implicitly also using deductive and inductive

reasoning, often combined.

What is more

important, however, is that deduction and induction effectively depend on the world being organisable into

discrete categories, i.e. things/words. What I am trying to argue is that

language is not only a way of communicating ideas; language is also a way of organising the world into discrete categories,

thus making deductive and inductive logics possible. Deduction is the art of

reasoning from such categories to their specific members, and induction is the

art of reasoning from specific members to broader categories. If the world is

not organisable into discrete groups, such reasoning falls apart.

That is the main

reason why many modern taxonomists (scientists who classify living organisms)

are struggling with distinguishing different species. The more the biologists

learn about the diversity of living organisms, the more they realise that the

concept of a ‘species’ is more a made-up grouping than a real, natural unit.

There is so much continuous variation within and between ‘species’ that the

scientist must make rather arbitrary decisions on how to decide the ‘limit’

between closely related ‘species’. This is just one example of humans trying to

impose order on something that apparently is not meant to be organisable.

I admit that this

discussion might have been rather confusing, but I hope at least the general

message has come across. Scientific research is based mainly on inductive

reasoning, or generalisations, basically; scientific inference, on the other

hand, relies on deduction, the opposite of induction. Deduction is the art of

inferring from the general to the particular, while induction is about generalising

from the particular to the general. Induction is less certain than deduction,

but much more useful when it comes to investigating the world. Both are often

used in combination, however, as they are not very meaningful in isolation.
